mlexps

Hello!

mlexps is essentially a collection of Jupyter notebooks conducting machine learning
experiments (hence the name), with a focus on exploring strange setups, pushing neural networks to the
breaking point (and thus revealing their weaknesses), demystifying them and discussing
what can be improved.

The motivation

Neural networks are quite mysterious beasts. They converge most of the time, until
they don't. On some datasets, they do wonders and on others, they fall far behind stuff
like XGBoost or just straight up can't learn. What is the typical solution when one gets
stuck? Read up on the literature and try out a bunch of things like lowering the learning
rate, changing the network's size, using a different optimizer, etc. Basically, relying on
luck. In my view, this is completely unacceptable.

There needs to be a clear, first-principles approach to figuring out why something failed.
Why did my electronic circuit fail? Let's run a quick simulation, grab the voltages, an
oscilloscope, cross-check and figure out what went wrong. Why did my liquid rocket engine
explode? Let's grab the chamber pressure measurements, see if there is a green flash indicating
liquid copper or not, and rerun CFD workflows.

Likewise, why aren't people intercepting gradient flows (a lot of analysis around function-9-linear-vs-skip)
to see if they're abnormally small or large? If someone had done this earlier, Kaiming initialization
would have been discovered a lot sooner. Why aren't people creating a bunch of
networks with different hyperparameters, comparing performance and fitting simple equations
describing relationships between hyperparams? If people did this more often, EfficientNet
and natural language scaling laws could have been discovered earlier.
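As a rough illustration of what intercepting gradient flow can look like, here is a minimal sketch in plain Python, with no framework involved. The helper name `relu_mlp_gradient_norms`, the toy loss (sum of the final activations) and the chosen depth, width and gains are all assumptions made for this example and are not taken from the notebooks. It runs a forward and backward pass through a deep ReLU MLP by hand and records the norm of the gradient arriving at each layer's output, once with Kaiming-style scaling (weight std of `sqrt(2 / fan_in)`) and once with a deliberately small scale.

```python
import math
import random


def relu_mlp_gradient_norms(depth, width, gain, seed=0):
    """Return the L2 norm of dLoss/d(activation) at each layer's output, first layer first.

    Hypothetical helper for illustration: a square, bias-free ReLU MLP whose
    weights are drawn from N(0, (gain / sqrt(width))^2).
    """
    rng = random.Random(seed)
    std = gain / math.sqrt(width)  # fan_in equals width for every layer
    weights = [
        [[rng.gauss(0.0, std) for _ in range(width)] for _ in range(width)]
        for _ in range(depth)
    ]
    h = [rng.gauss(0.0, 1.0) for _ in range(width)]  # random input vector

    # Forward pass: remember which ReLU units were active in each layer.
    masks = []
    for W in weights:
        z = [sum(w * x for w, x in zip(row, h)) for row in W]
        masks.append([1.0 if v > 0 else 0.0 for v in z])
        h = [max(v, 0.0) for v in z]

    # Backward pass for the toy loss = sum(final activations).
    grad = [1.0] * width  # gradient at the last layer's output
    norms = [math.sqrt(sum(g * g for g in grad))]
    for W, mask in zip(reversed(weights[1:]), reversed(masks[1:])):
        gz = [g * m for g, m in zip(grad, mask)]  # back through ReLU
        # Back through the linear layer: multiply by W transposed.
        grad = [sum(W[i][j] * gz[i] for i in range(width)) for j in range(width)]
        norms.append(math.sqrt(sum(g * g for g in grad)))
    norms.reverse()
    return norms


kaiming = relu_mlp_gradient_norms(depth=20, width=64, gain=math.sqrt(2))
small = relu_mlp_gradient_norms(depth=20, width=64, gain=0.5)

# Ratio of the gradient norm at the first layer to that at the last layer.
kaiming_ratio = kaiming[0] / kaiming[-1]
small_ratio = small[0] / small[-1]
print(f"first/last gradient norm, Kaiming-style init: {kaiming_ratio:.3g}")
print(f"first/last gradient norm, small init:         {small_ratio:.3g}")
```

With the small gain the gradient should shrink by a roughly constant factor per layer, so the first-to-last ratio collapses by many orders of magnitude, while the Kaiming-style scale keeps it within a modest factor of 1. In a real PyTorch model the same numbers would typically be collected with backward hooks rather than a hand-written backward pass.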

Basically, there are lots of avenues, tiny unit tests and analysis techniques that would be very
valuable in debugging your networks, and yet most people rely on luck. Hopefully, this
will change soon, and these Jupyter notebooks are my attempt to help drive that change.
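The idea of fitting simple equations to a hyperparameter sweep, mentioned above, can be sketched in a few lines. This is only an illustration under stated assumptions: `fit_power_law` is a hypothetical helper, and the sweep data is synthetic (generated from a known power law), not a measured result from any notebook. The fit is an ordinary least-squares line in log-log space.

```python
import math


def fit_power_law(xs, ys):
    """Least-squares fit of y = a * x**b, done as a straight line in log-log space."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(xs)
    mx = sum(lx) / n
    my = sum(ly) / n
    # Slope of the log-log line is the exponent b.
    b = sum((u - mx) * (v - my) for u, v in zip(lx, ly)) / sum(
        (u - mx) ** 2 for u in lx
    )
    # Intercept of the log-log line is log(a).
    a = math.exp(my - b * mx)
    return a, b


# Synthetic sweep, NOT measured data: losses generated from 3.0 * width**-0.5.
widths = [16, 32, 64, 128, 256]
losses = [3.0 * w ** -0.5 for w in widths]

a, b = fit_power_law(widths, losses)
print(f"loss ~ {a:.3f} * width^{b:.3f}")
```

On real sweeps the points will be noisy, so it is worth plotting the residuals in log-log space before trusting the fitted exponent.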

Besides strange tests, I also experimented on a lot of architectures here, from classical
ones like MLPs and CNNs, to GANs, VAEs, ViTs, Transformers, YOLO, graph networks, collaborative
filtering and diffusion models. I'd say it's pretty diverse. Over time, I explored a lot more
topics than just deep learning alone. This includes bioinformatics, molecular biology, quantum
chemistry, signal processing, electrical engineering, benchmarking, search engines, economics
and population simulations. I'm just really curious about how everything works.

Dependencies

These experiments usually make heavy use of k1lib,
a library I made that streamlines a lot of the analysis. Features include isolating
subnetworks, adding callbacks that execute at specific points in time, benchmarking
tools (measuring time complexity, memory footprint, etc.) and in general lots of
quality-of-life tools. The cli submodule
in particular is quite powerful, as you can transform high-dimensional irregular
data structures with relative ease. All of these notebooks are unit-tested against the
latest version of the library, to ensure consistency.

They also use k1a. This provides significant
acceleration to k1lib by compiling hot code down to C.


If what you're looking for is how PyTorch is implemented underneath, I made these posts a
while ago. More information about myself can be found here.
Feel free to reach out regarding anything.

Interactive demos

Some notebooks have an interactive demo built in that allows you to quickly explore what that
notebook is doing, all inside a browser, so this is a collection of all of them. Sometimes the
server goes down, so I made a separate page tracking whether the demos
are up or not.

Sections summary

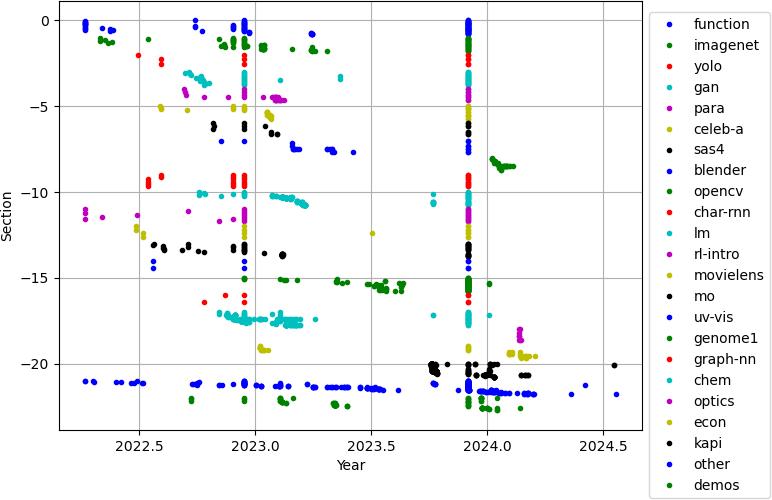

Timelines

I started out with the "function" section, in which I just wanted to grasp the basics of how to build these networks,
how to scale them, how they behave, how to debug them and whatnot. Then, I experimented a lot with CNNs, in
sections such as "imagenet", "yolo", "gan", "celeb-a" and "sas-4". After that, I also experimented with attention, ViTs
and transformers, in sections like "imagenet", "blender", "char-rnn" and "lm". Section "char-rnn" includes some of my
earliest work, and "lm" the latest work, with a lot of advanced experiments on text. Meanwhile, I was working
on non-DL-related content, particularly chemistry and biology (sections "mo", "uv-vis", "genome", "chem"),
and did a lot of benchmarks in section "other". A quick timeline of all sections and notebooks:

The vertical line you see near the end of 2022 marks when I started tracking the history of all notebooks. After
running integration tests on them, I had to change minor things, but even a minor change updates
the last updated time, which effectively erases history. I realized this and built a system to track them, but
the damage was done, hence the thick line.

Datasets

These are all the datasets that are used, with some scouting/filtering notebooks and loading/training
notebooks available for each dataset, to navigate notebooks faster. Some of these are datasets I
collected on my own, and I have not set up a system where you can just download them. Contact me at 157239q@gmail.com
if you want them.

Capabilities achieved

This is a list of capabilities/goals achieved, along with the specific notebook that achieves each one.

| Notebook | Description |
|---|---|
| function/1-bs-loss | Fit predefined function |
| imagenet/3-vanilla | Image classification |
| imagenet/7-tsne | t-SNE transformation |
| gan/4-style-transfer | Style transfer |
| gan/7-denoising | Image denoising |
| gan/8-progressive-deepening | Progressive deepening |
| gan/9-vae-interface | Image generation |
| celeb-a/3-lossF | Contrastive learning |
| sas4/2-reconstruct-scene | 2d scene reconstruction |
| char-rnn/3-vanilla | Word classification using attention |
| lm/2-classification-bow | Paragraph classification |
| lm/3-embedding | Word embeddings |
| movielens/2-memory | Recommender systems |
| mo/6-dist-matrix-recon | 3d structure reconstruction given distogram |
| chem/1-titration | Titration simulation |
| other/2-circuits | Circuit simulation |
| other/13-wikidata | Building search index |
| other/18-page-rank | Ranking pages |

Foundational/impressive notebooks

This is a list of important/impressive/information-dense notebooks, categorized into multiple types:

- Insight: really explores certain aspects of deep learning. Some provide really deep insights into things
- Correlation: varies some hyperparameter and looks at the resulting performance, like scaling laws
- Grid scan: kinda like correlation, but more complicated/tests multiple hyperparameters at the same time
- Search interface: includes interactive interfaces that can search for a variety of data types

| Notebook | Type | Description |
|---|---|---|
| function/11-skip-really-deep | Insight | Very complex dynamics in super deep networks captured and analyzed |
| function/19-cripple | Insight | Cripples/attenuates network in the middle, see what happens |
| function/20-missing-chunks | Insight | Sometimes throws out entire layers, see if the network can bypass them completely |
| function/21-freeze-subnetwork | Insight | Freeze parts of the network, see if it can still extract signals |
| gan/2-function-vae | Insight/grid scan | Compression efficiency of variational autoencoders |
| gan/6-multi-agent | Insight | Tries to have multiple critics going against 1 generator in a GAN |
| gan/8-progressive-deepening | Insight | Tries to speed up training by slowly increasing input image resolution |
| gan/9-vae-interface | Insight | Controls a VAE, and builds an interface to communicate with a pretrained network |
| gan/10-vae-features | Insight | Tries to use a pretrained VAE |
| celeb-a/3-lossF | Insight | Solving unbalanced loss in face recognition |
| lm/2-classification-bow | Insight | Paragraph type classification using bag of words |
| rl-intro/6-temporal-difference | Insight | Temporal difference vs Monte Carlo |
| rl-intro/7-eligibility-traces | Insight/grid scan | Multiple lambdas for $TD(\lambda)$ |
| mo/3-cif-visualize | Insight | Scouts and visualizes COVID spike protein |
| mo/8-dist-matrix-recon-2 | Insight | Reconstructs 3d structure from distogram |
| other/2-circuits | Insight | How to avoid infinities in circuit simulations |
| function/7-deep-vs-shallow | Correlation | How long can MLP be? (30) |
| function/18-dataset-size | Correlation | How small can datasets be? (20 samples) |
| imagenet/6-bn-conv-relu-order | Correlation | What is the correct ordering of batch norm, convolution and relu? (CRB) |
| imagenet/13-double-descent | Correlation | Network's performance vs model size |
| other/7-nyquist-sampling | Correlation | Good audio sampling factor |
| other/9-request-throughput | Correlation | Webserver throughput |
| other/11-sql-throughput | Correlation | SQL server throughput |
| other/19-image-loading-throughput | Correlation | How to best load images from disk for training |
| function/13-skip-wide-depth | Grid scan | Width-depth tradeoff |
| imagenet/4-sizing | Grid scan | Scaling laws for network width |
| char-rnn/4-correction-factor | Grid scan | Post softmax attention correction factor |
| char-rnn/5-attn-sizing | Grid scan | Width-depth tradeoff |
| char-rnn/6-attn-head | Grid scan | How many attention heads are good? |
| movielens/3-k-means | Grid scan | k-means under increasing noise |
| genome/5-gene-ontology | Search interface | Gene ontology search |
| genome/6-sequence-ontology | Search interface | Sequence ontology search |
| other/13-wikidata | Search interface | Wikidata knowledge graph |